DIY-VR: SENS VR Report

For my presentation with Nai-Chen, we chose S.E.N.S. VR, a virtual reality experience with graphic novel aesthetics. A mystery told in three ten-minute parts, it has the user follow (and sometimes become) a film noir detective as he tracks a series of clues across a bleak, geometric landscape. The piece was inspired by, and created in collaboration with, the graphic novelist Marc Antoine Mathieu.

At first glance, what sets SENS VR apart from other VR experiences is its minimal visual design, and the piece succeeds because it commits to a strong aesthetic. Rather than trying to make the user feel that they’re in a real location, the creators play with how simple lines and shadows can create a sense of space and movement. Like an M.C. Escher drawing, dimensions expand and collapse depending on how you look at a line. The piece also recognizes VR’s potential to play with concepts of space and to break rules, particularly those of perception and physics. Shifts in scale, line, and color open up new realities to explore that didn’t exist a moment before.

The interaction itself is fairly simple, relying on the reticle and wayfinding. To move to the next location, the user scans the space for an object or sign that will launch them somewhere else. The piece also plays with expectations about the user’s role. Sometimes you seem to be the detective: visual cues give you the sense that you’ve flown into his body, or you see his shadow follow you as you move through the space. Other times, you are brought outside the detective’s body, floating above him as a kind of watchful sidekick. This shift repeats several times throughout the experience.

Because wayfinders are the primary interaction, I noticed a change in how I looked around. In other VR pieces I usually spend time constantly circling to see everything the 360 construction can afford; here, I only looked around if the next arrow wasn’t already in view or if a scene was particularly beautifully drawn. I was more interested in passing through each scene, getting to the next point as quickly as I could to see how the space would change. As users, we don’t really understand what exactly we are searching for beyond the next arrow, yet we feel compelled to keep pushing forward, if only to see what optical tricks and creative line work come next.

Some things we read:

https://www.inverse.com/article/14300-vr-game-sens-is-a-graphic-novel-come-to-life

http://fabbula.com/sens-vr-entering-drawn-world/

DIY-VR: desireVR

https://itp.nyu.edu/classes/diy-vr-fall2016/2016/10/17/tingly-feels/

DIY-VR: Westworld

I watched the first two episodes of the new HBO series Westworld. I started it to take a break from school work, but quickly realized that it provides an interesting case study for immersive reality.

In WW, an unnamed corporation has created a “fully immersive” theme park, also called Westworld. The line between what is “real” and what is not is razor thin. From what we can tell this early on, the park occupies a real physical place, most likely somewhere in the American West. WW is an augmented reality built from constructed sets filled with constructed objects…and constructed people. Even in this future, storytellers (Mr. Sizemore in the show) are still trying to figure out how to craft fully immersive experiences. The mixture of the real and the imaginary (yet physically tangible) is enough to make everything feel like a vivid dream.

What makes WW feel real, more than anything else, are the robots. They are designed to appear human but retain enough unreality in their gestures and words that “real” people have no problem raping or killing them. There are no consequences to their actions, at least for now. How do the human guests decide where to draw the line morally? Just like in the video games we’re familiar with today, visitors to the park get to pretend they’re someone else, in another time and place. But as characters keep repeating throughout the show, the main draw of WW is for people to find out “who they really are,” the assumption being that without the confines and rules of society, people will act out their hidden desires (very Freudian). So it seems very appropriate that the park is set in the Wild West (which at first puzzled me as overly anachronistic), since “The Wild West” as an idea has always stood for a realm where anything goes and there are no rules. The phrase was also applied to the early internet, suggesting that the same attitude was pervasive online in its early days.

Returning to desires, I watched the trailers and read through descriptions of several of the other movies on the list and was interested to learn that David Cronenberg’s films (two of which are on the list) are entirely about repressed/fulfilled desires. This line of thinking ended up inspiring what I worked on this week.

EDIT: Interestingly enough, there was a Westworld VR experience at New York Comic Con: http://mashable.com/2016/10/08/westworld-hbo-nycc-virtual-reality/#j3WtDBKi3qqt

Also, a conversation about Westworld and the advent of more immersive games in VR: http://www.rollingstone.com/culture/news/is-hbos-westworld-really-about-video-games-w443910

A couple of choice quotes:

“Yet the park in Westworld, like virtual reality will someday be, is so realistic that we recoil at his actions. This immersion is the difference. In VR, you’ll kill someone and see their blood on your hands – and they will really be your hands – and watch the light leave their eyes. What will that do to us?”

“He talked about how no player would want to be as affected by killing a video game character as a Real Live Non-Sociopathic Human would be affected by killing a real person.”

“Once we spend more time inside these systems, we’ll realize that VR is a virtual space, not a corporeal one, and we’ll start to play with the spaces in a way that we’re kind of afraid to do right now.”

Understanding Networks: Wireshark Assignment

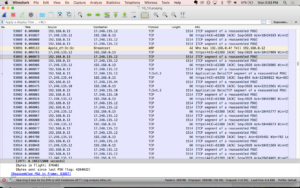

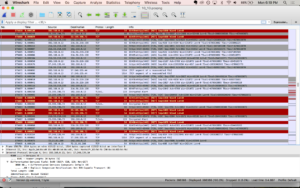

I did a couple of different packet captures while figuring out how to use Wireshark. Running one over the course of a couple of hours made me appreciate the enormity of information passing through our networks: when I tried to shut the session down, my entire computer froze because it was trying to process around 4.6 GB. I did a shorter capture the next day (17 min), which gave me more manageable data: 366386 packets captured at 347.4 packets per second.

One of the first things I noticed was how many packets were TCP, with the occasional SSL. Wireshark’s packet colorization rules made this really clear: scrolling through, I’d see mostly light purple interrupted by occasional bands of black and red. Red means the RST (reset) flag is set, and I found a Stack Overflow page that says the RST flag “signifies that the receiver has become confused and so wants to abort the connection” or that someone on the other end is trying to stop traffic. The black lines are TCP Dup ACKs (duplicate acknowledgements) and fast retransmissions. From what I can tell, that’s the network signaling that packets are getting dropped.
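To make the red and black bands a bit more concrete, here’s a minimal sketch of what those flags are at the byte level. The bit values are the standard TCP header control flags; the `(flags, ack, payload_length)` tuples and the duplicate-ACK rule are my own simplification for illustration (Wireshark’s real analysis checks more conditions, such as an unchanged window size), so treat this as a toy model rather than how Wireshark does it.

```python
# Control-flag bits in the TCP header's flags byte (RFC 9293; ECE/CWR from RFC 3168)
TCP_FLAG_BITS = {
    0x01: "FIN",
    0x02: "SYN",
    0x04: "RST",  # reset: abort the connection
    0x08: "PSH",
    0x10: "ACK",
    0x20: "URG",
    0x40: "ECE",
    0x80: "CWR",
}
ACK = 0x10


def decode_flags(flags_byte):
    """List the names of the flags set in a TCP flags byte, lowest bit first."""
    return [name for bit, name in TCP_FLAG_BITS.items() if flags_byte & bit]


def duplicate_ack_indices(segments):
    """Simplified duplicate-ACK check for one direction of one connection.

    `segments` is a hypothetical list of (flags_byte, ack_number, payload_length)
    tuples in capture order. A segment counts as a duplicate when it is a pure
    ACK (only the ACK flag, no payload) repeating the last acknowledgment number.
    """
    duplicates = []
    last_ack = None
    for i, (flags, ack, payload_len) in enumerate(segments):
        if flags == ACK and payload_len == 0 and ack == last_ack:
            duplicates.append(i)
        if flags & ACK:
            last_ack = ack  # remember the most recent acknowledgment number
    return duplicates
```

For example, `decode_flags(0x14)` gives `["RST", "ACK"]`, the combination behind many of the red rows. A receiver that keeps re-sending the same acknowledgment number is effectively saying “I’m still waiting for the segment after this one,” which is why runs of Dup ACKs hint at lost packets.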

I did WHOIS searches on a few of the IP addresses sending RSTs and Dup ACKs and mostly found Apple, Google, and Amazon…even when I wasn’t on any web pages owned by those companies. I also found lots of links to cloud computing companies, consumer data analytics, ads, and content delivery networks (CDNs).

When I filtered for just HTTP, there were 118 HTTP packets out of 366386. Filtering by IP address, I found that even though my roommate was home during that time, I was doing all the web surfing. She, however, showed up under UDP and SSDP (Simple Service Discovery Protocol), plus one mDNS (multicast DNS) packet, for a total of 74 packets. From what I can tell, that traffic is her devices looking for network services. Filtering for my IP address, 99.9% of the packets (366023) were associated with it. So that leaves 289 packets that neither of our IP addresses can account for…

Listing all IP addresses in the capture, I found 39 packets from another IP address on the network (ending in .10), labeled mDNS and IGMPv3 (a communications protocol used by hosts and adjacent routers on IPv4 networks to establish multicast group memberships). 74 packets came from the IP address ending in .1, which I assume is my router; those were mostly SSDP, plus some map requests associated with my IP address.

Overall, 228580 packets had my IP address as the source (62.42%) and 137443 had it as the destination (37.53%). The next six IPs, for both source and destination, were Apple’s, making up 26.41% and 46.74% respectively.
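A quick sanity check on the bookkeeping, using only the counts reported in this post: the packets sent and received by my address should add up to the total associated with it, and subtracting my roommate’s packets should leave the mystery remainder.

```python
# Counts reported above for the 17-minute capture
total_packets = 366386
mine = 366023             # packets with my IP as source or destination
mine_as_source = 228580
mine_as_destination = 137443
roommate = 74             # her UDP/SSDP/mDNS packets

# Every packet involving my address was either sent or received by it
assert mine_as_source + mine_as_destination == mine

unaccounted = total_packets - mine - roommate
share_mine = mine / total_packets
print(f"unaccounted: {unaccounted}, my share: {share_mine:.1%}")  # unaccounted: 289, my share: 99.9%
```

The sent/received split does line up exactly with the 366023 figure, so the 289 leftover packets really are traffic between other hosts (like the .10 and .1 addresses) rather than a counting mistake.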

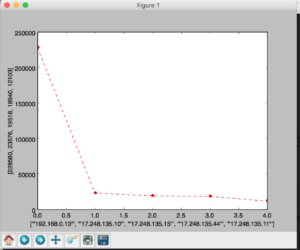

I tried creating a couple of bar graphs in Google Sheets, but it could only handle my HTTP-only export (the full capture file was too large).

I ended up writing a Python program that reads the source IP addresses and builds a dictionary with each IP address as a key and its number of occurrences as the value. I used matplotlib to plot the counts for the top 5 source addresses.
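For anyone who wants to try the same thing, here’s a minimal sketch of that approach. It is not the code in my repo: it assumes the capture was exported from Wireshark as CSV with the default packet-list header (a column literally named `Source`), and the function names are just for illustration. The counting uses only the standard library; matplotlib is imported only when you actually plot.

```python
import csv
import io
from collections import Counter


def count_sources(csv_text, column="Source"):
    """Count packets per source IP in a Wireshark packet-list CSV export.

    Assumes the export keeps a header row with a column named `column`.
    """
    counts = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        ip = row[column]
        counts[ip] = counts.get(ip, 0) + 1  # IP address -> number of occurrences
    return counts


def top_sources(counts, n=5):
    """The n most frequent (ip, count) pairs, largest count first."""
    return Counter(counts).most_common(n)


def plot_top_sources(counts, n=5):
    """Bar chart of the top-n source addresses (requires matplotlib)."""
    import matplotlib.pyplot as plt

    pairs = top_sources(counts, n)
    plt.bar([ip for ip, _ in pairs], [count for _, count in pairs])
    plt.xlabel("Source IP")
    plt.ylabel("Packets")
    plt.title(f"Top {n} source addresses")
    plt.xticks(rotation=45, ha="right")
    plt.tight_layout()
    plt.show()
```

Usage would look something like `counts = count_sources(open("capture.csv", newline="").read())` followed by `plot_top_sources(counts)`, where `capture.csv` is a placeholder for your own export. `Counter.most_common` does the sorting, so there’s no need to sort the dictionary by hand.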

Here’s the git repo: https://github.com/zoebachman/wireshark_analysis

HSF: Assignment 4

So, I did end up doing a 180 and have now switched to working with Paula. We will be exploring the issue of diversity in tech, specifically gender equality in the workplace and how company culture influences the technology and devices that we interact with on a daily basis.

You can find our latest blog post here: http://www.itp.paulaceballos.com/

HSF: Assignment 3

This is a quick write up of some of my thoughts and research on Red Hook:

- Frame the why – as people, particularly urbanites, we’re becoming more distanced from our natural environment. But in reality it’s not that far away and with climate change no longer a future discussion but a present fact, how do we (re)conceive of our relationship to our environment? What does this mean for long-standing communities and neighborhood development? How can we imagine progress while mitigating our desires in order to keep ourselves safe?

- Idea that the future is now – climate change is already devastating our communities

- Example of resilience

- How do we conceive of a future post-change?

- How are they an example of civic/community engagement?

- Generally – what lessons can we as people and citizens learn?

- Users – New York City residents / others

- Research (to understand):

  - Red Hook – old SW Brooklyn neighborhood
    - Maritime/shipping history
    - Geographically isolated (no subway)
    - Largest public housing (check) in New York
    - 90s – history of violence (school principal shot)
    - Mostly flooded during Hurricane Sandy (2012)
    - Now – waves of trendiness, local personalities resistant to large development
    - Lots of community involvement – Red Hook Initiative at helm
  - Simultaneously announced:
    - Flood protection barriers (safest one would block access to water)
    - AECOM, a large development corporation: redevelopment plan with renderings (community is not down with this) (https://www.dnainfo.com/new-york/20160913/red-hook/red-hook-reimagined-with-massive-redevelopment-plan-subway-link)

A couple of ideas that I’ve had:

Iteration 1:

Classic AR project of looking at spaces at different points in time

AR walking tour of Red Hook, mapping areas past/present/future

Narration from local community members

Maybe documentary-style voice actors for historical figures?

Iteration 2:

Interactive website: a 3D map of Red Hook with a slider that shows the neighborhood at three different times; a multimedia collection of photographs/videos

Iteration 3:

Narrative told by one person, most likely a child, in Red Hook, recalling Sandy…thoughts about history and the future?

Narrating a 360 video? That could allow for the feeling of water rising.

Iteration 4:

Place-based installation, maybe a different kind of AR? Activated by geolocation? Tide Tone as part of this?

HSF: Circuitous

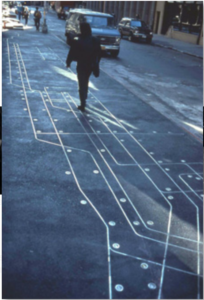

I recently found out about Francoise Schein’s project in SoHo (1985), which maps the subway lines.

It’s a meditation on connectivity and the layers of networks in our lives, which rings even truer today.

So I thought of an update, a remix/revision of this project that asks people to more actively connect transportation infrastructure, movement, links, and technology.

Close to half of NYC subway stations now have Wi-Fi. What about making use of that?

So, a rough idea: a phone app game that uses geolocation services for an active subway scavenger hunt, called Circuitous.

Circuitous means a roundabout journey, just like the game. The idea is to visit different stations, answer questions or perform tasks, and earn items. Not just any items, but electrical components. As you collect them, you try to build circuits: the more complex the circuit, the more points. Like Ingress or Pokémon Go, it’s a race against fellow players, but it’s also educational.
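The “visit a station” check could be as simple as a geofence: compare the player’s reported position with each station’s coordinates and see if any are within some radius. Below is a rough sketch using the haversine great-circle distance. The 150 m radius and the station names and coordinates are placeholders, not real data, and since GPS is often unreliable underground, a real version might check in at street-level entrances or key off the station Wi-Fi instead.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.atan2(math.sqrt(a), math.sqrt(1 - a))


def stations_in_range(player, stations, radius_m=150):
    """Names of stations within radius_m of the player.

    `player` is a (lat, lon) pair; `stations` maps a (hypothetical) station
    name to its (lat, lon) pair.
    """
    lat, lon = player
    return [
        name
        for name, (s_lat, s_lon) in stations.items()
        if haversine_m(lat, lon, s_lat, s_lon) <= radius_m
    ]
```

Each successful check-in would then unlock a task and, on completion, a component for the player’s circuit inventory; that part of the game logic is still open.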

Understanding Networks: Traceroute Tones

Rather than visually illustrating the paths that packets take, I decided to use sound to reflect on the journey and to slow time down enough to understand each stop that information makes along the network. I found inspiration in dial-up modem sounds.

The program is meant to take in IP addresses gathered from a traceroute, map them to audible frequencies, and play them as a short melody for each traceroute (one chord per IP address). Big ups to Aaron Montoya for help with the audio code.
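Here’s one possible version of the IP-to-chord mapping, sketched in Python rather than p5.js. It is not the mapping in the MIDI-IP repo: I’m assuming each of the four octets (0 to 255) is scaled linearly onto MIDI notes 48 to 84 (three octaves), converted to a frequency with the usual equal-temperament formula (A4 = note 69 = 440 Hz), and that each hop becomes one chord of four sine waves written to a WAV file with the standard `wave` module.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second


def midi_to_freq(note):
    """Equal-tempered frequency in Hz for a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def ip_to_notes(ip, low=48, high=84):
    """Map each octet of a dotted IPv4 address onto a MIDI note in [low, high].

    Hypothetical mapping for illustration: linear scaling, one note per octet.
    """
    octets = [int(part) for part in ip.split(".")]
    if len(octets) != 4 or not all(0 <= o <= 255 for o in octets):
        raise ValueError(f"not an IPv4 address: {ip!r}")
    return [low + round(o * (high - low) / 255) for o in octets]


def chord_samples(notes, seconds=0.5):
    """Average sine waves for the notes so every sample stays within [-1, 1]."""
    freqs = [midi_to_freq(n) for n in notes]
    count = int(SAMPLE_RATE * seconds)
    return [
        sum(math.sin(2 * math.pi * f * i / SAMPLE_RATE) for f in freqs) / len(freqs)
        for i in range(count)
    ]


def write_melody(hops, path, seconds=0.5):
    """Write one chord per traceroute hop, in order, to a mono 16-bit WAV file."""
    frames = bytearray()
    for ip in hops:
        for s in chord_samples(ip_to_notes(ip), seconds):
            frames += struct.pack("<h", int(s * 32767))  # little-endian 16-bit sample
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(bytes(frames))
```

For example, `ip_to_notes("0.255.0.255")` gives `[48, 84, 48, 84]`, and `write_melody(["192.168.1.1", "203.0.113.9"], "route.wav")` would produce a one-second, two-chord melody (the filename and addresses are placeholders). A linear scale keeps neighboring addresses sounding similar; a pentatonic or other quantized scale would probably sound more musical.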

As of this writing, the code is still buggy and can only play one IP address.

I’d like to keep working on this so that I can automate the connection from the terminal to p5.js and easily pass through and sonify the IP data.
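One way to automate the terminal end is a small script that runs `traceroute -n` (numeric output, so each hop line starts with a hop number followed by an IP) and pulls out one address per hop. This is a sketch that assumes the common BSD/Linux output layout; silent hops (`* * *`) and the “traceroute to …” header are skipped, and only the first responder on each hop line is kept.

```python
import json
import re
import subprocess

HOP_LINE = re.compile(r"^\s*(\d+)\s+(.*)$")           # " 3  203.0.113.9  10.2 ms ..."
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def parse_traceroute(output):
    """Return the first IPv4 address on each numbered hop line, in hop order."""
    hops = []
    for line in output.splitlines():
        match = HOP_LINE.match(line)
        if not match:
            continue  # header ("traceroute to ...") or blank line
        ip = IPV4.search(match.group(2))
        if ip:
            hops.append(ip.group(0))
    return hops


def run_traceroute(host):
    """Run the system traceroute in numeric mode and parse its hops."""
    result = subprocess.run(["traceroute", "-n", host], capture_output=True, text=True, check=False)
    return parse_traceroute(result.stdout)


def hops_to_json(host, hops):
    """Serialize the hops so a browser sketch can load them as a JSON file."""
    return json.dumps({"target": host, "hops": hops})
```

Writing `hops_to_json(host, run_traceroute(host))` to a file next to the p5.js sketch would let the sketch load the route instead of having the IPs typed in by hand; the JSON layout here is my own choice.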

Code here: https://github.com/zoebachman/MIDI-IP

DIY-VR: Reflection/Mundanity

I spent time this weekend playing around with the Ricoh Theta, understanding it as a photographic tool with both possibilities and limitations.

For one, if you don’t have a monopod, clamp, or surface to place the Theta on, you’re perpetually going to be part of the shot.

So I played with this relationship – placing the theta on top of my head, holding it next to my ear or out above me, letting it swing by my side.

Viewing these photos, I keep finding it interesting that unlike regular photography, the Theta doesn’t replicate a photographer’s POV – literally, what the photographer sees the moment the picture is taken. In order to capture that sense of body in this sphere, the photographer needs to remove themselves from the scene so the viewer can step into the space.

——————————

To me, VR has this expectation that the content needs to be impactful in some way – visually, emotionally, physically. But I wondered if re-seeing spaces we’d previously been in or were familiar with would create the easiest sense of presence, since it’s already believable in its existence.

Playing with the Theta, I took a picture of my bedroom and then viewed that image in Google Cardboard. Suddenly I was transported…to exactly where I physically was.

But it was disorienting: a few seconds had passed, so a few things were different. Yet I could confidently navigate the space, knowing that its real self was still around me. I gave it to my roommate and watched her as she looked at my room while in my room.

As I experimented with the Theta, I kept thinking about mundanity, the everyday and unremarkable. In some ways, that’s what the Theta strives for – it is the new selfie format and snapshot technology.

What does it mean to increasingly look at 360 photos/videos and have this become normalized? Are we going to constantly want experiences that raise our pulse, or can it be about the everyday? As someone in my Hacking Story Frameworks class mentioned, what excites him about VR/AR is not the mega-blockbuster entertainment possibilities but seeing his family and his home, and being able to share that experience with others. The Theta lets us take snapshots of a moment in time; it encourages us to use it the way we use our smartphone cameras, immediately uploading to social media. If the Theta can show an entire world, why pretend that we can stage it? Control it?

To play with the idea of immersion, I experimented with using mirrors to reflect imagery. Reflecting (no pun intended) on prior immersive experiences, I remembered this project by Theo Eshetu, Brave New World II (1999).

It uses images from across the world refracted several times. Recently I’ve been listening to a book about multiple universes and the author credits the two mirrors in his bedroom as the inspiration for his curiosity. How many times have we marveled at the multiple versions of ourselves and our reality that we see when two mirrors come together at a certain angle? I was almost tempted to make a human-size kaleidoscope, like the one here:

I loved the worlds that the Theta App made when I used pictures of reflective surfaces.

But when I tried to recreate them using the app, of course the rest of the world got in the way. Photography and film typically use mirrors to show what exists behind the viewer; in 360, we can simply turn around.

In some ways, this reflection and refraction is entirely possible with VR and 360.

Thinking back to reflective surfaces, I played with using multiple mirrors, trying to angle them so as to create a series of infinite selfies and a subject caught between mirrors.

What I liked about this is that in some ways it ends up giving the viewer a form: as in the Arnolfini Wedding Portrait by Jan van Eyck, we can see the creator, here the Theta caught in the mirror. How does the person viewing react when they do not see their reflection? Do they see themselves as the Theta? As a ghost?

——

Thinking about presence as the creator, it’s a new experience to imagine the presence of the person who will be looking from all angles, in a way I can only somewhat control. I have to consider how the angles will affect their experience, because they won’t have the separation of someone viewing photographs in a gallery, on a web page, or in a book. They will be “inside” the photograph and expect to see things from “their” perspective, even though that perspective was created and constrained by someone else.

Slide show here: https://goo.gl/photos/MJeksU87moVxiEvd8

Learning Machines: Encoder

For our first assignment, we were asked to create an encoder: something that takes a string and reduces the amount of data needed to represent it. From Wikipedia’s explanation of run-length encoding:

For example, consider a screen containing plain black text on a solid white background. There will be many long runs of white pixels in the blank space, and many short runs of black pixels within the text. A hypothetical scan line, with B representing a black pixel and W representing white, might read as follows:

WWWWWWWWWWWWBWWWWWWWWWWWWBBBWWWWWWWWWWWWWWWWWWWWWWWWBWWWWWWWWWWWWWW

With a run-length encoding (RLE) data compression algorithm applied to the above hypothetical scan line, it can be rendered as follows:

12W1B12W3B24W1B14W

This can be interpreted as a sequence of twelve Ws, one B, twelve Ws, three Bs, etc.
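Reading the encoded string back is just a matter of pairing each number with the symbol after it. A small decoder sketch, assuming every symbol is a single non-digit character (true for the B/W example above):

```python
import re


def rle_decode(encoded):
    """Expand run-length pairs like '12W1B' back into the original string."""
    # The whole input must consist of (count, symbol) pairs such as '12W', '1B', ...
    if re.fullmatch(r"(?:\d+\D)*", encoded) is None:
        raise ValueError(f"not a run-length encoded string: {encoded!r}")
    return "".join(symbol * int(count) for count, symbol in re.findall(r"(\d+)(\D)", encoded))
```

`rle_decode("12W1B12W3B24W1B14W")` rebuilds the 67-character scan line: 18 characters of encoding standing in for 67 pixels.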

Having played with image manipulation in Python before, I thought I’d try to encode a black-and-white image. Using the Python Imaging Library (PIL), I’d start by getting the image’s pixel information (a set of lists within a larger list), map the pixels to B or W and put them into a new list, then compare each item in the list and count/illustrate runs of Bs and Ws.

I got to a point where I was getting runs, but my counting logic seemed slightly off (mostly when to increase the count). Eve and I got together and compared code, collaborating on an encoder with help from Kat Sullivan. Eve’s code and mine had similar comparison and counting logic, but meeting with Kat proved useful: she showed us that rather than comparing each item to the one after it, we could compare it to the one before, since when we got to the end of the list, there’d be nothing after it to compare to.
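Here’s a minimal sketch of that compare-with-the-previous-item idea (my own reconstruction for illustration, not the exact code Eve and I wrote). Each character is checked against the one before it; when they differ, the run that just ended is written out, and the final run is flushed after the loop because nothing follows it.

```python
def rle_encode(data):
    """Run-length encode a string by comparing each character with the one before it."""
    if not data:
        return ""
    pieces = []
    count = 1
    for i in range(1, len(data)):
        if data[i] == data[i - 1]:
            count += 1  # same symbol as the previous one: extend the current run
        else:
            pieces.append(f"{count}{data[i - 1]}")  # the run ended on the previous symbol
            count = 1
    pieces.append(f"{count}{data[-1]}")  # flush the last run; nothing comes after it
    return "".join(pieces)
```

Applied to the Wikipedia scan line, this returns `12W1B12W3B24W1B14W`. Because the loop starts at index 1 and always looks backward, it never reads past the end of the string, which was exactly the edge case that tripped up the compare-with-the-next-item version.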

The version Eve and I worked on is here:

Taking what we learned, I went back to my image encoder to improve it. The first problem on my hands was that I was wrong to assume my black-and-white image contained ONLY pure black and pure white pixels; in fact, some pixels were neither. Converting to greyscale was an option, but it would give me a range of values from 0 to 255, so mapping each value to its own letter was out of the question.

I adapted the code we worked on to use a specified image as input.
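One way around the in-between pixels is to convert to greyscale and then threshold: anything darker than a cutoff becomes B, everything else W, so only two symbols remain. The sketch below assumes a cutoff of 128 and uses Pillow (the maintained fork of PIL) only in the loading function. To keep it short, the run counting here uses `itertools.groupby` instead of the compare-with-previous loop; both produce the same encoding.

```python
from itertools import groupby


def rle_encode(line):
    """Run-length encode a string; groupby yields one group per run of equal symbols."""
    return "".join(f"{len(list(group))}{symbol}" for symbol, group in groupby(line))


def pixels_to_bw(rows, threshold=128):
    """Map greyscale values (0-255) to 'B'/'W'; anything below threshold counts as black."""
    return ["".join("B" if p < threshold else "W" for p in row) for row in rows]


def load_greyscale_rows(path):
    """Read an image with Pillow and return its greyscale pixels as a list of rows."""
    from PIL import Image

    img = Image.open(path).convert("L")  # "L" = 8-bit greyscale, values 0-255
    width, height = img.size
    px = img.load()  # pixel access object, indexed as px[x, y]
    return [[px[x, y] for x in range(width)] for y in range(height)]


def encode_image_rows(rows, threshold=128):
    """Encode each scan line of the thresholded image separately."""
    return [rle_encode(line) for line in pixels_to_bw(rows, threshold)]
```

With a placeholder file, `encode_image_rows(load_greyscale_rows("drawing.png"))` returns one encoded string per scan line. The threshold is a judgment call; grey anti-aliasing around the edges of lines will land on one side or the other depending on where it’s set.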

Code can be found at: